

Deep Learning is one of the more successful approaches to Machine Learning. It uses artificial neural networks inspired by the human brain, and it can automatically discover the best representations of the input data for the task at hand. Combined with its ability to capture very complex, feature-rich patterns, this makes Deep Learning an excellent approach for solving problems that involve unstructured data or a large number of features, with minimal user intervention.

Traditional Machine Learning algorithms work well when you have structured data and well-engineered features. However, they are limited because a human expert must select raw data and transform it into features: attributes that better represent the underlying problem.

Feature engineering is complex. It requires significant domain knowledge and a serious time investment, and the task becomes almost impossible when vast amounts of data arrive in a constant stream.

Deep Learning is especially useful in situations where manual feature engineering is unrealistic:

- When there is a large number of features
- When there is a lack of domain understanding
- When there is a complex relationship between the features and the goal
- When the data is unstructured, such as text, audio, images, or video

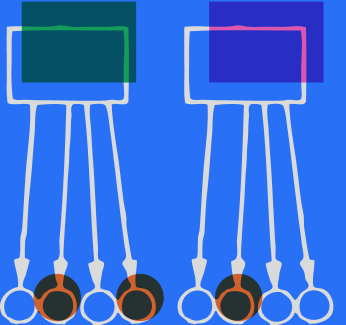

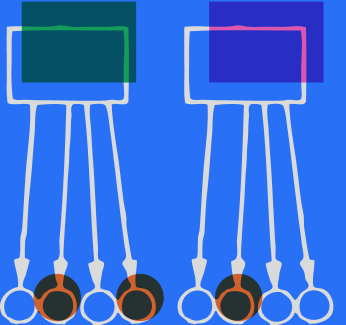

Deep Learning neural networks contain layers of nodes loosely modeled on the neurons of the human brain. The nodes are interconnected, and each connection carries a number, or "weight". A heavier weight gives the signal traveling along that connection more effect on the next layer of nodes.
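
The following minimal sketch in plain Python makes the idea of weights concrete by modeling a single node. The sigmoid activation, the bias term, and the helper name `node_output` are illustrative assumptions. They are not details of any particular framework or of Kepler. The node multiplies each incoming signal by its connection weight, adds up the results, and squashes the total into the range (0, 1).

```python
import math

def node_output(inputs, weights, bias=0.0):
    """Output of one node: a weighted sum of its inputs passed through a sigmoid.

    The sigmoid activation and the bias are illustrative assumptions.
    """
    # Each incoming signal is scaled by the weight of its connection,
    # so connections with larger weights contribute more to the total.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid squashes the total into (0, 1); it is one common activation choice.
    return 1.0 / (1.0 + math.exp(-total))

signals = [1.0, 1.0]
print(round(node_output(signals, [0.1, 0.1]), 2))  # small weights: 0.55
print(round(node_output(signals, [2.0, 2.0]), 2))  # large weights: 0.98
```

The node receives identical inputs in both calls, yet the one with larger weights produces a much stronger output. This is the sense in which heavier weights have more effect on the next layer.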

In Deep Learning, a machine learns to filter data through multiple layers. An input layer receives input objects such as text, images, or sounds. Multiple hidden layers compute and transform the data. An output layer then produces a particular outcome or prediction. "Deep" means there is more than one hidden layer.

Each layer transforms the data from its current representation into a new representation that retains the information needed to produce the desired answer. This way, very complex data can be reduced to a smaller set of features that are critical for classification or prediction. This property is called representation learning.
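
The layer-by-layer process described above can be sketched as a forward pass through a small fully connected network, written in plain Python with no libraries. The layer widths, the ReLU activation, and the random, untrained weights are assumptions chosen for illustration. In a real network, the weights are learned during training. The sketch keeps every intermediate representation, so you can see the data shrink from 8 input features to 2 outputs. It also checks the "more than one hidden layer" definition of deep.

```python
import random

def relu(v):
    # Rectified linear unit: keeps positive values and zeros out negatives.
    return [max(0.0, x) for x in v]

def dense(v, weights, biases):
    # Fully connected layer: each output node is a weighted sum of every input value.
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    # Small random weights; a trained network would have learned these values.
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    """Pass x through every layer and return each intermediate representation."""
    representations = [x]
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:  # hidden layers get a nonlinearity; the output layer does not
            x = relu(x)
        representations.append(x)
    return representations

# Hypothetical layer widths: 8 input features -> hidden 6 -> hidden 4 -> 2 outputs.
sizes = [8, 6, 4, 2]
rng = random.Random(0)
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

reps = forward([rng.random() for _ in range(sizes[0])], layers)
hidden_layers = len(layers) - 1
print("sizes:", [len(r) for r in reps])  # sizes: [8, 6, 4, 2]
print("deep:", hidden_layers > 1)        # deep: True (two hidden layers)
```

Each entry in `reps` is a new representation of the same input. Training would adjust the weights so that the smaller representations keep the information the output layer needs.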

Two popular Deep Learning architectures are Convolutional Neural Networks (CNNs), used for image processing and object detection, and Recurrent Neural Networks (RNNs), which "remember" past data to inform how they interpret the current input or predict the future.
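
The two architectures differ mainly in how they reuse weights. The toy sketch below, in plain Python, shows the core operation of each:

- **CNN:** slides one small kernel along its input.
- **RNN:** feeds its own hidden state back in at every step.

The kernel, the scalar weights `w_x` and `w_h`, and the helper names are hypothetical. Real CNNs use learned multi-channel 2D kernels for images, and real RNNs use learned vector states. LSTMs add gates on top of this basic recurrence.

```python
import math

def conv1d(signal, kernel):
    """'Valid' 1D convolution (strictly, cross-correlation, as most DL libraries compute it)."""
    k = len(kernel)
    # The same small kernel slides along the input, so one set of weights
    # detects the same local pattern wherever it appears.
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn(sequence, w_x, w_h, h0=0.0):
    """Scalar Elman-style RNN: the hidden state h carries information from past steps."""
    h = h0
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

# A hand-picked edge-detector kernel responds where neighboring values change.
print(conv1d([0, 0, 1, 1, 1, 0], [-1, 1]))  # [0, 1, 0, 0, -1]

# Feed a single pulse followed by zeros, with and without the feedback weight.
with_memory = rnn([1, 0, 0], w_x=1.0, w_h=0.5)
without_memory = rnn([1, 0, 0], w_x=1.0, w_h=0.0)
print(with_memory[-1] > 0, without_memory[-1] == 0.0)  # True True
```

Setting `w_h` to zero removes the feedback path, so the network forgets the pulse immediately. With a nonzero `w_h`, a trace of it survives to the last step, which is the "memory" that lets an RNN use past data.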

Kepler's automated pipeline builder not only chooses the right Deep Learning architecture for your task but also selects the data preprocessing steps that maximize performance.

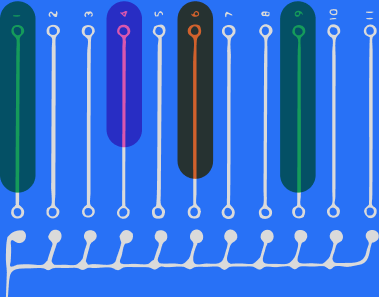

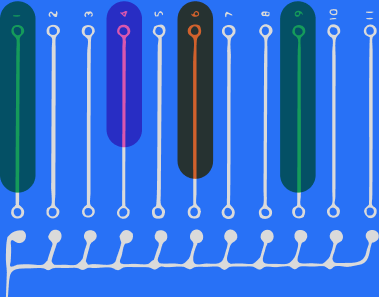

Kepler supports multiple Deep Learning architectures, including:

- Custom 1D and 2D Convolutional Neural Networks (CNNs)
- Long Short-Term Memory networks (LSTMs)
- AutoEncoders
- Information Maximizing Self-Augmented Training (IMSAT)
- Pre-trained ResNet50
- Pre-trained VGG
- Pre-trained BERT
- Fully connected neural networks

Kepler offers Deep Learning capabilities to predict categories or quantities, forecast time series, segment data, or detect anomalies.

Kepler trains its Deep Learning models on GPUs to handle their compute requirements and speed up training.
